The Enterprise Nervous System

From improvisation to infrastructure: turn user sessions into identity-scoped tools—and decision traces—for AI.

The Problem

We built incredible brains. We gave them no hands.

The AI industry promised that autonomous agents would revolutionize work. So far, most have not delivered. A recent MIT study found that 95% of corporate AI pilots fail to deliver measurable P&L value.

This isn’t a data problem. It’s a structure problem: we tried to automate jobs before we mapped them. We deployed backend agents into environments they couldn’t see, expecting them to navigate complex, undocumented, VPN-gated systems using APIs that don’t exist.

The Insight

Integration shouldn’t be about connecting servers. It should be about connecting the user.

Enterprises don’t need to rewrite their backends to support AI. They need to instrument the environment where work actually happens. Your users already bridge the gap between Salesforce, legacy ERPs, and internal tools every day—at the glass, in the browser, authenticated via SSO.

Char turns the user’s authenticated session into the universal API.

The Solution

We are building the nervous system for the AI-enabled workforce: an infrastructure layer that turns client environments into structured, addressable tools for AI.

This creates a staged path to automation:

Augment (copilot): The human works with AI assistance. Productivity improves immediately.

Verify (mapping): We capture the decision trace—what evidence was consulted, what constraints and approvals applied, what action was taken, and the outcome—and turn tribal knowledge into code.

Automate (driverless): Validated workflows graduate to controlled, scalable execution.

Most enterprise software can tell you what happened. Almost none can tell you why it happened at the moment it mattered. Over time, these decision traces compound into a context graph: a graph of decisions with evidence, constraints, and outcomes.

This becomes a system of record for decisions—not objects.

We start with prescribed structure—tool schemas and policy. The learned structure emerges from real workflows through decision traces, and that’s what compounds.

How It Works

The Universal Runtime

The runtime lives inside the user’s session. It wraps existing UI capabilities—a form submit, a modal open, a search filter—into standardized tools (WebMCP) without a backend rewrite.

    useWebMCP({
      name: "process_refund",
      description: "Open the refund dialog for the current order",
      handler: async () => {
        setRefundModalOpen(true);
        return { status: "opened_modal" };
      },
    });

The Identity-Scoped Bus

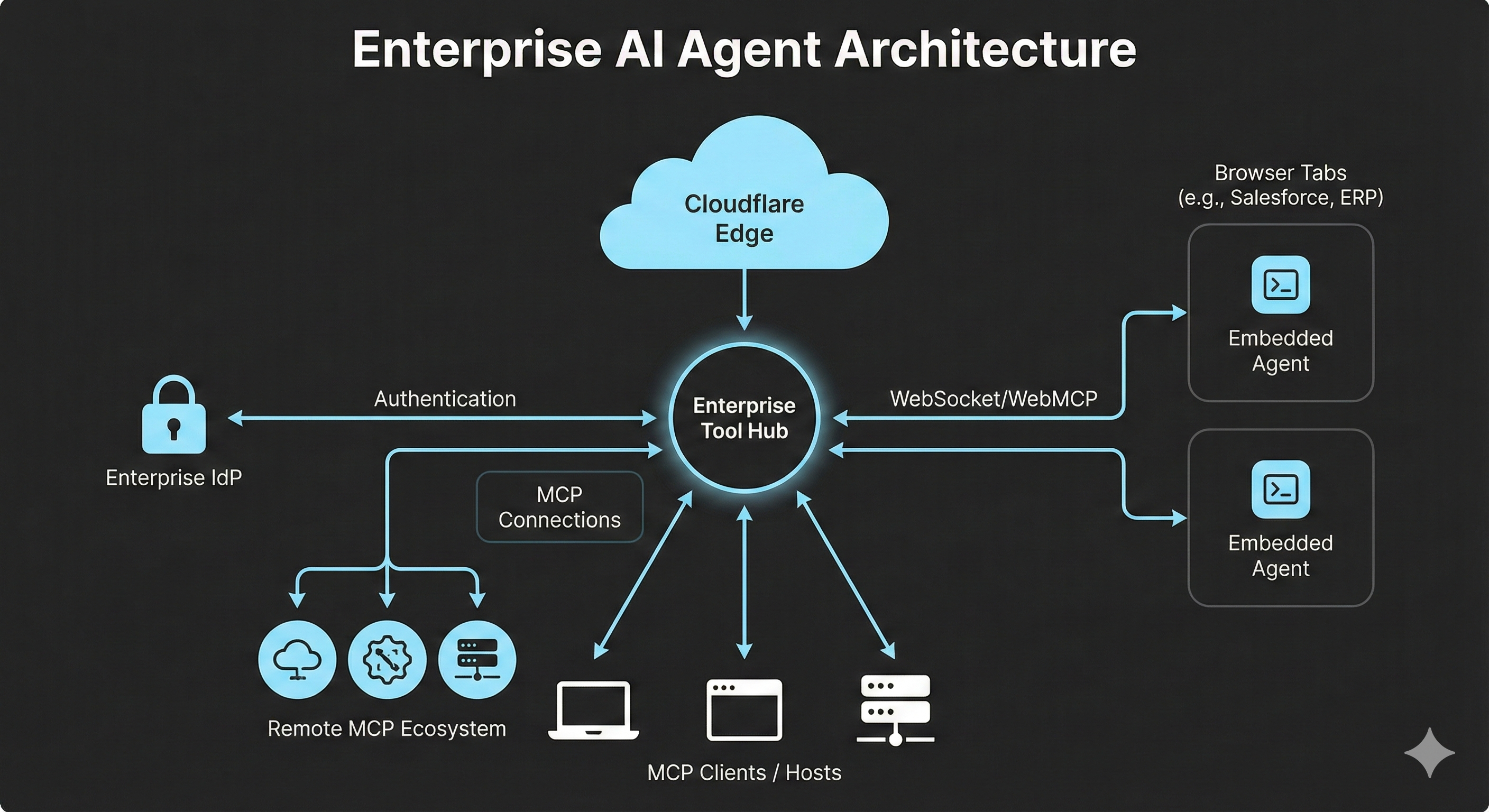

We maintain a stateful hub per identity that aggregates tools from connected apps and tabs into a single tool list, then routes calls back to the owning session. This enables cross-app interoperability: one user, one agent, many apps.
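The aggregate-then-route behavior can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the production hub: `IdentityHub`, `register`, `disconnect`, `listTools`, and `callTool` are hypothetical names, and the handler here runs in-process where a real hub would forward the call over a transport to the owning tab.

```typescript
// Hypothetical sketch of an identity-scoped hub. All names are illustrative.
type ToolHandler = (args: Record<string, unknown>) => unknown;

interface ToolDef {
  name: string;
  description: string;
  handler: ToolHandler; // stands in for a call forwarded to the owning session
}

class IdentityHub {
  readonly userId: string;
  // tool name -> owning session and definition
  private tools = new Map<string, { sessionId: string; def: ToolDef }>();

  constructor(userId: string) {
    this.userId = userId;
  }

  // A tab or app connects and advertises its tools.
  register(sessionId: string, defs: ToolDef[]): void {
    for (const def of defs) this.tools.set(def.name, { sessionId, def });
  }

  // A tab closes: its tools drop out of the aggregated list.
  disconnect(sessionId: string): void {
    this.tools.forEach((entry, name) => {
      if (entry.sessionId === sessionId) this.tools.delete(name);
    });
  }

  // The single tool list the agent sees.
  listTools(): string[] {
    return Array.from(this.tools.keys()).sort();
  }

  // Route the call back to the session that owns the tool.
  callTool(name: string, args: Record<string, unknown> = {}): { sessionId: string; result: unknown } {
    const entry = this.tools.get(name);
    if (!entry) throw new Error(`Unknown tool: ${name}`);
    return { sessionId: entry.sessionId, result: entry.def.handler(args) };
  }
}

// One user, two tabs, one tool list.
const hub = new IdentityHub("user-42");
hub.register("crm-tab", [
  { name: "lookup_order", description: "Find an order by id", handler: (args) => ({ orderId: args["id"] }) },
]);
hub.register("support-tab", [
  { name: "process_refund", description: "Open the refund dialog for the current order", handler: () => ({ status: "opened_modal" }) },
]);
const routed = hub.callTool("process_refund");
```

In this sketch a later registration with the same tool name replaces the earlier one; a real hub would likely need namespacing per app or session.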

Because the hub sits in the execution path, it can capture decision traces by default: which tools were available at decision time, what context was fetched across systems, what approvals were required, what changed, and what the user corrected.

Model Sovereignty

We decouple the tools from the inference layer. Your tools stay inside the secure session while inference can be hosted or bring-your-own. The control plane stays portable across model vendors.
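One way to picture the boundary: only tool descriptions cross to the inference layer, while handlers stay in the session. The sketch below assumes hypothetical names (`LocalTool`, `describeForModel`, `InferenceProvider`) and uses stub providers in place of real model calls.

```typescript
// Hypothetical sketch: what leaves the session vs. what stays local.
interface LocalTool {
  name: string;
  description: string;
  inputSchema: Record<string, unknown>;
  handler: (args: Record<string, unknown>) => unknown; // never leaves the session
}

// The model only ever sees name, description, and schema.
type ModelFacingTool = Pick<LocalTool, "name" | "description" | "inputSchema">;

function describeForModel(tools: LocalTool[]): ModelFacingTool[] {
  return tools.map(({ name, description, inputSchema }) => ({ name, description, inputSchema }));
}

// Assumed provider interface: hosted or bring-your-own, same contract.
interface InferenceProvider {
  id: string;
  chooseTool(request: string, tools: ModelFacingTool[]): string | null;
}

// Stub standing in for a real model: always picks the first tool offered.
function stubProvider(id: string): InferenceProvider {
  return {
    id,
    chooseTool: (_request, tools) => {
      const first = tools[0];
      return first ? first.name : null;
    },
  };
}

const localTools: LocalTool[] = [
  {
    name: "process_refund",
    description: "Open the refund dialog for the current order",
    inputSchema: { type: "object", properties: {} },
    handler: () => ({ status: "opened_modal" }),
  },
];

const payload = describeForModel(localTools);
const hosted = stubProvider("hosted");
const selfHosted = stubProvider("customer-vpc");
const pickedHosted = hosted.chooseTool("refund this", payload);
const pickedSelfHosted = selfHosted.chooseTool("refund this", payload);
```

Swapping `hosted` for `selfHosted` changes nothing about the tools or the payload, which is the portability property described above.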

From Syntactic to Semantic Interoperability

For years, standards like FDC3 tried to connect enterprise apps through rigid, hard-coded contracts. When an API changed, the integration broke. Because our router consumes tool definitions at runtime, tools are discovered dynamically and small schema changes often work without a coordinated redeploy across every integration.

We can roll tool changes without redeploying agent code. Correctness is enforced by schemas and policy.
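As a rough illustration of schema-enforced correctness, the sketch below validates call arguments against whatever schema the tool advertises at call time. It assumes a deliberately tiny, JSON-Schema-like subset (`ToolSchema` and `validateArgs` are hypothetical names); a real deployment would use a full schema validator.

```typescript
// Hypothetical sketch: validate arguments against a schema read at runtime.
// This is a small subset for illustration, not a JSON Schema implementation.
type PrimitiveType = "string" | "number" | "boolean";

interface ToolSchema {
  properties: Record<string, { type: PrimitiveType }>;
  required: string[];
}

function has(obj: object, key: string): boolean {
  return Object.prototype.hasOwnProperty.call(obj, key);
}

// Returns a list of problems; an empty list means the call is acceptable.
function validateArgs(schema: ToolSchema, args: Record<string, unknown>): string[] {
  const errors: string[] = [];
  for (const key of schema.required) {
    if (!has(args, key)) errors.push(`missing required field "${key}"`);
  }
  for (const key of Object.keys(args)) {
    const prop = has(schema.properties, key) ? schema.properties[key] : undefined;
    if (!prop) {
      errors.push(`unexpected field "${key}"`);
    } else if (typeof args[key] !== prop.type) {
      errors.push(`field "${key}" should be ${prop.type}`);
    }
  }
  return errors;
}

// v2 of the tool adds an optional "reason" field.
const refundV1: ToolSchema = {
  properties: { orderId: { type: "string" } },
  required: ["orderId"],
};
const refundV2: ToolSchema = {
  properties: { orderId: { type: "string" }, reason: { type: "string" } },
  required: ["orderId"],
};

const existingCall = { orderId: "A-1001" };
const againstV1 = validateArgs(refundV1, existingCall);
const againstV2 = validateArgs(refundV2, existingCall);
const badCall = validateArgs(refundV2, { orderId: 42 });
```

The call written against v1 still validates against v2 because the new field is optional, while a wrongly typed argument is rejected before any handler runs.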

Why Now?

The protocol is ready. MCP standardizes tool definitions across languages, making polyglot interop possible.

Copilots need UI context. They need validation and approvals—they need to live at the session boundary.

Edge compute is mature. We can orchestrate multi-session state securely with edge primitives.

The Vision

We are not building a chatbot. We are building the universal glue.

We connect the entire enterprise stack—from the oldest legacy desktop app to the newest web dashboard—through the only thing they all share: the user.

The autonomous enterprise won’t be built by agents that bypass users, but by infrastructure that learns from them.

Technical Appendix

A technical sketch for engineering leads.

Architecture

Client Runtime (Session Host): Runs in the browser/WebView. Exposes UI capabilities as tools via WebMCP. Transport-agnostic: postMessage, WebSockets, or SSE.

Edge Orchestration Node (Universal Hub): Stateful per-user hub that aggregates tools and routes calls back to the owning session while preserving UI parity.

Model Control Plane: MCP over SSE for interoperability. Bring-your-own-model support with data sovereignty guarantees.

OAuth Remote MCP: The hub connects to remote MCP servers via OAuth so access is scoped, auditable, and revocable.

Decision Traces (Not Just Logs)

A decision trace is the missing layer between state and action: the evidence consulted, the constraints and approvals applied, the action taken, and the outcome. In our architecture, this can be recorded as structured metadata alongside tool calls and approvals so it’s replayable and learnable—not just a firehose of events.
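To make "structured metadata" concrete, here is one possible shape for a trace record and a small query over recorded traces. The field names and the `TraceLog` class are assumptions for illustration; the actual schema may differ.

```typescript
// Hypothetical sketch of a decision trace record. Field names are illustrative.
interface DecisionTrace {
  tool: string;
  availableTools: string[]; // what the agent could have chosen at decision time
  evidence: string[];       // context consulted across systems
  constraints: string[];    // policies that applied
  approvals: string[];      // who signed off
  action: Record<string, unknown>;
  outcome: "succeeded" | "failed" | "corrected_by_user";
  recordedAt: string;       // ISO timestamp
}

class TraceLog {
  private traces: DecisionTrace[] = [];

  record(trace: Omit<DecisionTrace, "recordedAt">): DecisionTrace {
    const full: DecisionTrace = { ...trace, recordedAt: new Date().toISOString() };
    this.traces.push(full);
    return full;
  }

  // A simple "learnable" query: which approvals have past decisions for this tool involved?
  approvalsSeenFor(tool: string): string[] {
    const seen: string[] = [];
    for (const t of this.traces) {
      if (t.tool !== tool) continue;
      for (const a of t.approvals) {
        if (seen.indexOf(a) === -1) seen.push(a);
      }
    }
    return seen;
  }
}

const log = new TraceLog();
log.record({
  tool: "process_refund",
  availableTools: ["lookup_order", "process_refund"],
  evidence: ["order A-1001 delivered damaged"],
  constraints: ["refunds over 100 need a second approval"],
  approvals: ["support_lead"],
  action: { orderId: "A-1001", amount: 40 },
  outcome: "succeeded",
});
log.record({
  tool: "process_refund",
  availableTools: ["lookup_order", "process_refund"],
  evidence: ["order A-1002 never shipped"],
  constraints: ["refunds over 100 need a second approval"],
  approvals: ["support_lead", "finance"],
  action: { orderId: "A-1002", amount: 250 },
  outcome: "succeeded",
});
const refundApprovals = log.approvalsSeenFor("process_refund");
```

Because each record carries evidence, constraints, and approvals next to the action, queries like this one operate on decisions rather than raw events.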

Over time, these traces compile into a context graph: not a graph of nouns, but a graph of decisions with evidence, constraints, and outcomes.

Security and Identity

Security starts with explicit trust boundaries and least-privilege tools. We assume a hostile web (XSS, compromised scripts, prompt injection) and focus on limiting blast radius and making unsafe outcomes hard to execute.

Execution stays in the host application. Tools are deterministic JS handlers with schema validation and structured results, and they can reuse existing UI validation before any write.

The embedded runtime is a bridge, not a privilege escalator. When cross-origin, it cannot read the host DOM, cookies, or storage. It talks to the host over a hardened postMessage RPC channel with strict origin checks, targetOrigin, and a per-session handshake.
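The receiving side of such a channel can be sketched as a pure check that runs before any message is acted on. The message shape (`nonce`, `method`, `params`) and the function name `acceptRpc` are assumptions; in a browser this would be called from a `message` event listener, and replies would be sent with an explicit `targetOrigin` rather than `"*"`.

```typescript
// Hypothetical sketch of the inbound checks on a postMessage RPC channel.
// IncomingMessage mirrors the two MessageEvent fields the check relies on.
interface IncomingMessage {
  origin: string;
  data: unknown;
}

interface RpcRequest {
  nonce: string;
  method: string;
  params: unknown;
}

function acceptRpc(msg: IncomingMessage, trustedOrigin: string, sessionNonce: string): RpcRequest | null {
  // 1. Strict origin check: exact match, no prefix or substring matching.
  if (msg.origin !== trustedOrigin) return null;

  // 2. Shape check: ignore anything that is not an object payload.
  const data = msg.data;
  if (typeof data !== "object" || data === null) return null;
  const body = data as Record<string, unknown>;

  // 3. Per-session handshake: the nonce agreed at connect time must be present.
  if (body["nonce"] !== sessionNonce) return null;

  const method = body["method"];
  if (typeof method !== "string") return null;

  return { nonce: sessionNonce, method, params: body["params"] };
}

const trusted = "https://app.example.com"; // placeholder origin
const nonce = "n-123";                      // placeholder handshake value

const accepted = acceptRpc(
  { origin: trusted, data: { nonce, method: "tools/call", params: { name: "process_refund" } } },
  trusted,
  nonce,
);
const wrongOrigin = acceptRpc(
  { origin: "https://app.example.com.evil.test", data: { nonce, method: "tools/call" } },
  trusted,
  nonce,
);
const wrongNonce = acceptRpc(
  { origin: trusted, data: { nonce: "stale", method: "tools/call" } },
  trusted,
  nonce,
);
```

Messages that fail any check are dropped silently in this sketch, so a compromised script on another origin learns nothing from probing the channel.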

The Tool Hub is the policy authority. It is identity-scoped per org and user, aggregates tools, gates actions by policy and approvals, records decision traces, and provides a practical kill switch for connectors or tool classes.
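The gating logic can be sketched as a single decision function. The policy shape below (`disabledToolClasses` as the kill switch, `approvalRequired` as the approval list) and the function name `gate` are illustrative assumptions, not the hub's actual configuration format.

```typescript
// Hypothetical sketch of policy gating in the hub. Names are illustrative.
type Decision = "allow" | "needs_approval" | "deny";

interface Policy {
  disabledToolClasses: Set<string>; // kill switch per tool class or connector
  approvalRequired: Set<string>;    // tool names that need a human approval
}

interface ToolCallRequest {
  tool: string;
  toolClass: string;
  approvedBy?: string;
}

function gate(policy: Policy, req: ToolCallRequest): Decision {
  // The kill switch wins over everything else, including prior approvals.
  if (policy.disabledToolClasses.has(req.toolClass)) return "deny";
  if (policy.approvalRequired.has(req.tool) && !req.approvedBy) return "needs_approval";
  return "allow";
}

const policy: Policy = {
  disabledToolClasses: new Set<string>(),
  approvalRequired: new Set<string>(["process_refund"]),
};

const readCall = gate(policy, { tool: "lookup_order", toolClass: "read" });
const unapproved = gate(policy, { tool: "process_refund", toolClass: "payments" });
const approved = gate(policy, { tool: "process_refund", toolClass: "payments", approvedBy: "support_lead" });

// Flip the kill switch for the whole "payments" class.
policy.disabledToolClasses.add("payments");
const afterKill = gate(policy, { tool: "process_refund", toolClass: "payments", approvedBy: "support_lead" });
```

Checking the kill switch first means a disabled tool class stays disabled even for calls that were already approved.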

Remote MCP servers are connected via OAuth so access is scoped, auditable, revocable, and never requires sharing raw credentials with the browser.

Inference is decoupled and policy-controlled. Sensitive data can be redacted or kept local, and customers can route inference to their own environments.
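A minimal sketch of redaction before inference, assuming a simple key-based policy: `redactForInference` and the `[REDACTED]` placeholder are hypothetical, and the function only handles top-level fields.

```typescript
// Hypothetical sketch: mask sensitive top-level fields before context leaves the session.
function redactForInference(
  context: Record<string, unknown>,
  sensitiveKeys: string[],
): Record<string, unknown> {
  const out: Record<string, unknown> = {};
  for (const key of Object.keys(context)) {
    // Shallow only: nested objects are passed through unchanged in this sketch.
    out[key] = sensitiveKeys.indexOf(key) !== -1 ? "[REDACTED]" : context[key];
  }
  return out;
}

const sessionContext = { orderId: "A-1001", customerEmail: "pat@example.com", amount: 40 };
const safeContext = redactForInference(sessionContext, ["customerEmail"]);
```

The original object is left untouched, so local tools can still use the full context while the model sees only the masked copy.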

Roadmap

Zero-touch inspection: Auto-generate WebMCP wrappers from frontend scans.

Self-healing definitions: CI detects UI changes and regenerates tools to prevent drift.

Common Questions

Aren’t backend agents more reliable?

For headless workflows with stable APIs, yes. For copilots, the UI is often the source of truth for what’s possible right now.

Is it secure to run tools in the client?

Yes, if you treat the session as the permission boundary: tools are explicit and schema-validated, the runtime runs cross-origin from the host so it cannot read the host DOM, cookies, or storage, and the hub enforces approvals and data-exfiltration policy.

What about latency?

Most copilot actions are local UI transitions plus user confirmation. Keeping execution in the session avoids an extra network hop.

What Is an Agent?

We define an agent simply: a system that uses an LLM to decide which code to run. A chatbot takes “help” and outputs text. An agent takes “refund this” and calls refund_tool(id=123). WebMCP provides the interface for that control flow to touch your application.
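That definition fits in a few lines of code. In the sketch below the LLM is replaced by a stub (`stubDecide`) so the control flow is visible; `runAgent`, the `Decide` type, and the tool registry are illustrative assumptions rather than WebMCP's API.

```typescript
// Hypothetical sketch of the agent control flow, with a stub in place of the LLM.
type ToolCall = { tool: string; args: Record<string, unknown> };
type Decide = (request: string, toolNames: string[]) => ToolCall | null;

const tools: Record<string, (args: Record<string, unknown>) => string> = {
  refund_tool: (args) => `refund started for order ${String(args["id"])}`,
};

// Stand-in for the model: maps a request to a tool call, or to no call at all.
const stubDecide: Decide = (request, toolNames) =>
  request.indexOf("refund") !== -1 && toolNames.indexOf("refund_tool") !== -1
    ? { tool: "refund_tool", args: { id: 123 } }
    : null;

function runAgent(request: string, decide: Decide): string {
  const call = decide(request, Object.keys(tools));
  if (!call) return "No tool selected; reply with text only."; // the chatbot path
  const handler = tools[call.tool];
  if (!handler) throw new Error(`Model chose an unknown tool: ${call.tool}`);
  return handler(call.args); // the agent path: the model's choice becomes code execution
}

const agentResult = runAgent("refund this", stubDecide);
const chatResult = runAgent("help", stubDecide);
```

The only difference between the two paths is whether the model's output is treated as text or as a choice of which code to run.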